| Time | Activity |

|---|---|

| 13.00 - 13.15 | Opening |

| 13.15 - 13.45 | Keynote by Gabriela Csurka Privacy Preserving Visual Localization |

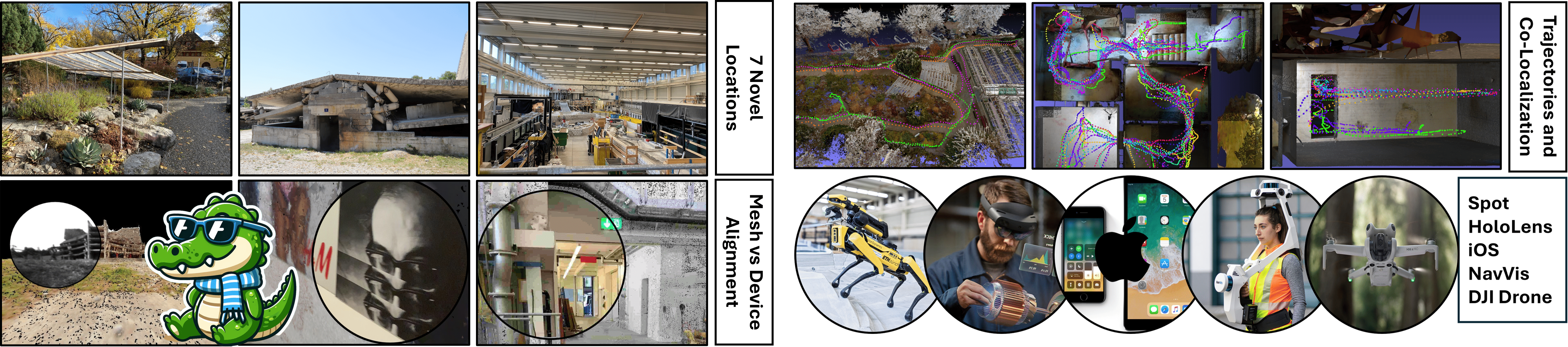

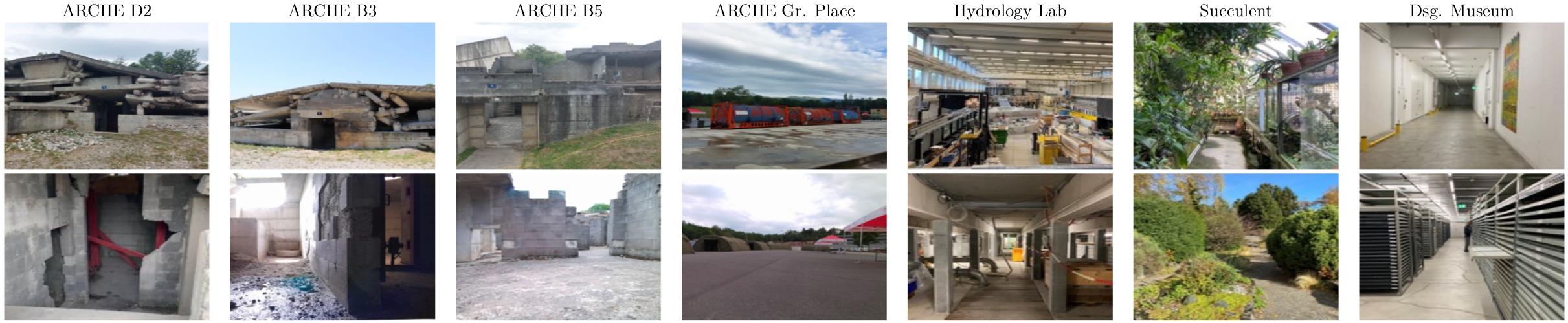

| 13.45 - 14.00 | Introduction of CroCoDL challenge |

| 14.00 - 14.15 | Challenge Winners |

| 14.15 - 14.45 | Keynote by Ayoung Kim Bridging heterogeneous sensors for robust and generalizable localization |

| 14.45 - 15.00 | Highlighted Paper Talk |

| 15.00 - 16.00 | Posters (248-257) and Coffee Break |

| 16.00 - 16.30 | Keynote by David Caruso Current performance of visual-inertial SLAM on egocentric data |

| 16.30 - 17.00 | Keynote by Torsten Sattler Vision Localization Across Modalities |

| 17.00 - 17.05 | Closing |

Sponsored by